We are awash with pseudoscientific claims that look scientific, dress scientifically and sound sciency, but in reality are not. We never need to look very far or very hard to find an example, and such widespread popularity should perhaps cause us to pause and wonder why this is so. Why do so many people cling so vigorously to ideas and claims that simply do not withstand any serious analysis, because there is no real evidence to back them up?

Dr. Sian Townson (who is on Twitter as @siantownson) has a nice article in the UK's Guardian that tackles this issue head-on.

It sets the scene brilliantly …

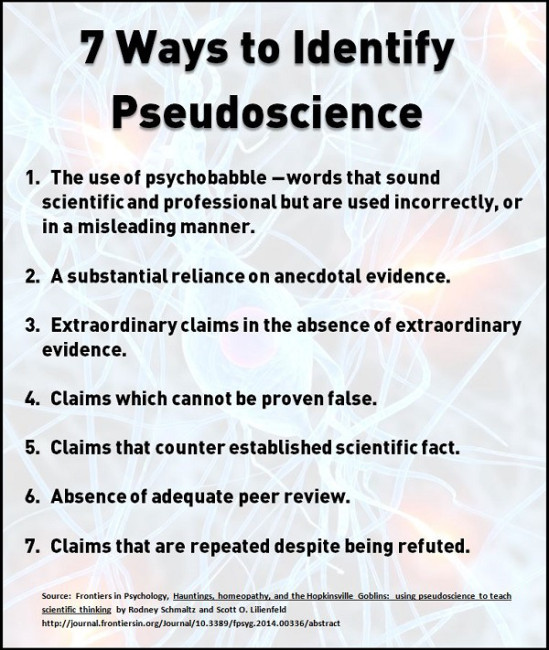

Pseudoscience is everywhere – on the back of your shampoo bottle, on the ads that pop up in your Facebook feed, and most of all in the Daily Mail. Bold statements in multi-syllabic scientific jargon give the false impression that they’re supported by laboratory research and hard facts.

Magnetic wristbands improve your sporting performance, carbs make you fat, and just about everything gives you cancer.

… and then moves on to the heart of the matter: the human psychology of which all this is perhaps a symptom. (Quick side note: I quite enjoyed her dig at the Daily Mail in the opening sentence.)

I’ve been here before

Last November I wrote about a study that explored why some people are inclined to embrace bullshit as truth. The conclusion the authors reached was that …

Across multiple studies, the propensity to judge bullshit statements as profound was associated with a variety of conceptually relevant variables (e.g., intuitive cognitive style, supernatural belief). Parallel associations were less evident among profundity judgments for more conventionally profound (e.g., “A wet person does not fear the rain”) or mundane (e.g., “Newborn babies require constant attention”) statements. These results support the idea that some people are more receptive to this type of bullshit and that detecting it is not merely a matter of indiscriminate skepticism but rather a discernment of deceptive vagueness in otherwise impressive sounding claims.

The reason I'm highlighting this latest Guardian article is that it builds upon that work and draws out several cognitive biases that go a long way toward explaining what is going on inside our heads. Note that I'm not talking about "them": I'm including myself, so this is about "us", as in all of us, because nobody is immune (even if we truly think we are).

So let’s take a quick tour through four of these biases that she draws out.

1. Sunk cost fallacy

This one explains rather a lot, and she describes it as follows …

…is the reason that people who have already wasted money on tickets to a terrible film also waste their evening watching it. It can be the reason that people chomp their way through terrible food or get married when the relationship has already soured – it’s the urge to justify previous decisions using the next one. And it means that if people have put their weight behind a belief, they are invested in it, and are likely to fight its corner.

It explains so much more as well. Once you have invested in something emotionally, no quantity of factual information trumps that, and so you will often encounter people who appear to be completely immune to reality. Perhaps the classic example is creationism: even after countless visits to a natural history museum, or a clear and concise presentation of the facts, nothing changes, because creationists have invested in the idea deeply at an emotional level and not at an intellectual level at all.

Key Point: the issue here is not a lack of information.

2. Confirmation and Selection bias

This follows on closely from the previous bias. Once we are emotionally invested in an idea …

we look for evidence to support a theory, and ignore evidence to the contrary. Given the several million individually observable things that happen to you every day, it’s easy to pick one to prove an idea you’ve already become attached to, whether superstition or stereotype.

… and so we lull ourselves into thinking we are right because we keep finding things that confirm what we already believe. In essence, this means starting with a conclusion and then working backwards ("Climate change is a myth, just look at all the snow outside …") while happily ignoring the actual data, which tells a rather different story.

3. Clustering Illusion

Sitting between your ears is an incredibly powerful pattern recognition engine. It is presumably like that because being able to identify patterns rapidly gave us, as a species, a distinct survival advantage. For example, working out that animals gather at watering holes, and so that waiting there is a good way to hunt game, could be a life-and-death insight; our ancestors survived in part because they could pick out such patterns.

But this ability to spot patterns can also work against us.

Seeing an apparent correlation can make it very tempting to think that there is some causal relationship. To give you a silly example, between 1820 and now the number of pirates has decreased. Over the same timeframe we can observe a warming trend, hence, obviously, the solution to global warming is simply to increase the number of pirates, because their decline has driven up global temperatures.

4. Dunning–Kruger Effect

This is beautifully summed up in just one line …

The less you know, the more likely you are to perceive yourself as an expert

I well recall an elderly teacher at school once explaining that we most probably all felt that we knew everything, but that over time this would change, and my 11-year-old mind immediately leapt to "But he is wrong, because I do know everything".

We can very easily fool ourselves into thinking that our knowledge of a specific topic is complete and that our understanding of it is truly deep.

One of the painful things about our time is that those who feel certainty are stupid, and those with any imagination and understanding are filled with doubt and indecision.

—Bertrand Russell, The Triumph of Stupidity

How can we possibly rise above all this?

There are two aspects to this. One is reaching out and effectively communicating in a manner that lets people join up the dots and reach a new insight for themselves …

If we’re going to dispel myths, we need to improve our ability to communicate, with creative approaches such as hands-on activities that encourage self-directed learning. Rather than just trying to stamp out misunderstandings, we need to offer people something else to believe in.

The second aspect is to look inwards as well: to question our own underlying assumptions, to be conscious of our own cognitive biases, and to be aware that the one person who will in all probability fool you more successfully than anybody else is yourself.