There is a meme going around that consists of a sweatshirt slogan that reads …

There are of course different approaches to modelling, and while some turn out not to be all that good, others very accurately reproduce what we observe. So what do we learn when we run the most accurate models forwards?

We have the results of a new study

Patrick Brown and Ken Caldeira of the Carnegie Institution for Science in Stanford, Calif., have published the results of a recent study that attempted to answer that question. Rather obviously, they are not the first to do this. The entire point of creating a climate model is to then run it forwards and work out what comes next. As you might anticipate, taking the projections from a collection of climate models and combining them is rather common; that’s what the IPCC (the UN’s climate panel) does.

This study however does do something new.

There are two things that make the accuracy of climate models potentially uncertain.

- Exactly how great will our greenhouse gas emissions continue to be? We simply don’t know, so the best we can do is to model what happens for various levels of greenhouse gas emissions. These are commonly referred to as the RCP (Representative Concentration Pathways) scenarios.

- The other concerns how accurate the climate model itself is. Has something rather important been missed, or has something rather complex not been handled in sufficient detail?

To tackle the second, the essence of what they have done is this …

it is possible to use observations to discriminate between well and poor performing models

… or to put that another way, let me quote one of the authors of the published paper …

“We know enough about the climate system that it doesn’t necessarily make sense to throw all the models in a pool and say, we’re blind to which models might be good and which might be bad,”

The study

With the title of “Greater future global warming inferred from Earth’s recent energy budget”, you can perhaps now project ahead and see where this is going. It has been published in Nature but unfortunately sits behind a paywall.

OK, so let’s cut to the chase here. The bottom line of their study is this …

Our results suggest that achieving any given global temperature stabilization target will require steeper greenhouse gas emissions reductions than previously calculated.

In other words, the models that did the best job of correctly predicting what we can actually observe also predict a greater degree of warming.

What exactly did they find?

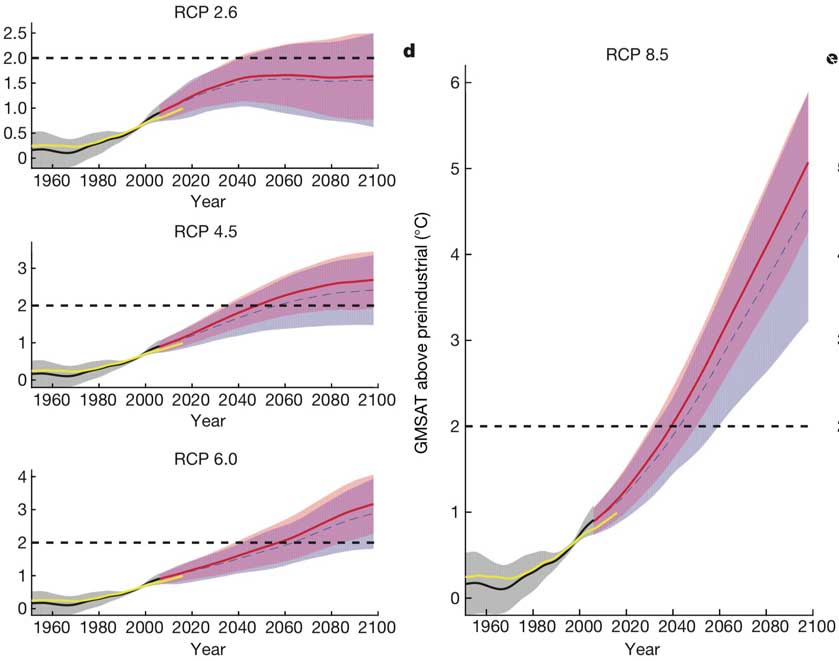

Below are the charts from the paper for the various different RCP scenarios …

Toss all the models into the mix and what you get is the dotted blue line (the raw unconstrained conclusion). If you instead look at the observationally constrained projection, what you get is the red line.

our results add to a broadening collection of research indicating that models that simulate today’s climate best tend to be the models that project the most global warming over the remainder of the twenty-first century
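To make the difference between the unconstrained and the observationally constrained lines a little more concrete, here is a toy sketch in Python. It is not the statistical method used in the paper. The model names, error scores, warming figures, and the inverse-error weighting are all made-up assumptions, chosen only to show how giving more weight to models that match observations better can shift a combined projection.

```python
# Toy illustration only: every number below is hypothetical and NOT from the study.
# Each entry: (model name, error against observations, projected warming in deg C).
# In this invented data set, the models with smaller errors project more warming.
models = [
    ("model_a", 0.5, 4.6),
    ("model_b", 1.0, 4.2),
    ("model_c", 2.0, 3.8),
    ("model_d", 4.0, 3.4),
]


def unconstrained_mean(models):
    """Treat every model equally: a plain average of the projections."""
    return sum(warming for _, _, warming in models) / len(models)


def constrained_mean(models):
    """Weight each projection by 1 / error, so that models closer to
    observations count for more. This weighting scheme is an assumption
    made for illustration, not the approach taken in the paper."""
    weights = [1.0 / error for _, error, _ in models]
    weighted_sum = sum(w * warming for w, (_, _, warming) in zip(weights, models))
    return weighted_sum / sum(weights)


raw = unconstrained_mean(models)
constrained = constrained_mean(models)
print(f"unconstrained: {raw:.2f} C, constrained: {constrained:.2f} C")
```

With these invented inputs the constrained figure comes out higher than the plain average, simply because the better-scoring models happen to be the warmer ones. That is the pattern the study reports, although its actual analysis is considerably more sophisticated.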

This is all part of the on-going conversation

As is highlighted within the Washington Post by Chris Mooney, the response to this has been as follows …

it appears that much of the result had to do with the way different models handled one of the biggest uncertainties in how the planet will respond to climate change.

“This is really about the clouds,” said Michael Winton, a leader in the climate model development team at the Geophysical Fluid Dynamics Laboratory of the National Oceanic and Atmospheric Administration, who discussed the study with The Post but was not involved in the research.

Clouds play a crucial role in the climate because among other roles, their light surfaces reflect incoming solar radiation back out to space. So if clouds change under global warming, that will in turn change the overall climate response.

How clouds might change is quite complex, however, and as the models are unable to fully capture this behavior due to the small scale on which it occurs, the programs instead tend to include statistically based assumptions about the behavior of clouds. This is called “parameterization.”

But researchers aren’t very confident that the parameterizations are right. “So what you’re looking at is, the behavior of what I would say is the weak link in the model,” Winton said.

This is where the Brown and Caldeira study comes in, basically identifying models that, by virtue of this programming or other factors, seem to do a better job of representing the current behavior of clouds. However, Winton and two other scientists consulted by The Post all said that they respected the study’s attempt, but weren’t fully convinced.

Bottom Line

It’s an interesting study. When it comes to the conclusion there are skeptics, but they still welcome this work …

“It’s great that people are doing this well and we should continue to do this kind of work — it’s an important complement to assessments of sensitivity from other methods,” added Gavin Schmidt, who heads NASA’s Goddard Institute for Space Studies. “But we should always remember that it’s the consilience of evidence in such a complex area that usually gives you robust predictions.”

Schmidt noted future models might make this current finding disappear — and also noted the increase in warming in the better models found in the study was relatively small.

If, however, what this study suggests is eventually accepted as correct, and that has not yet happened, then it will lead to rather a lot of sleepless nights for policymakers, because they will potentially have just had 15% of their carbon budget wiped out.